Human intelligence has been responsible for countless innovations that have shaped modern life: cell phones, computers, digital music and social media have reshaped what it means to exist on planet Earth. Yet despite these innovations that have driven society forward, can humans still consider themselves the smartest beings in the room? Artificial Intelligence (AI), simply defined as the science of creating machines that think like humans, has penetrated the daily lives of people around the globe for better or worse, making tasks like driving, staying healthy, communicating and learning easier. OpenAI launched overnight sensation ChatGPT in 2022, and it onboarded 100 million users faster than any app in history. The product is projected to rake in a billion dollars in 2024, making OpenAI a prime example of how the thirst for innovation can clash with a technology (tech) nonprofit’s mission to create what is best for humanity.

Humans are already paying a high price for these innovations. AI relies on a wealth of personal data to help people live more efficient lives, and privacy is the area perceived as the most negatively impacted by current uses of AI, followed by equality, free expression, association, assembly and work. AI has access to individuals’ financial information, credit scores, health information, and human resources data. It can also speak in decidedly human ways, whether via chatbots or digital assistants such as Siri and Alexa. According to a 2023 study by the Pew Research Center, more than half of Americans are concerned about the role AI plays in their daily lives, even though 28 percent say they use it daily. Unsurprisingly, most people want to ensure that humans maintain control of tech innovations, as well as personal information. Despite this apprehension, humans on the whole benefit when machines handle tasks more quickly, especially in the fields of healthcare and diagnostics. Amit Ray, a pioneer of emotional intelligence in the AI movement, says that compassionate AI “drives the machines to cry for those who are in pain and suffering, to find the shortest path to minimise the pain and suffering of others.”

But while AI has made many aspects of modern life easier, it could be on track to acquire rights of its own, and even some form of sentience, within the next two decades. Among other concerns, this would pose major challenges for the developers and humans who work alongside AI-generated content. Globally, 75 of 176 countries use AI for surveillance and border management. The sense that someone is always watching will only increase as the technology becomes more widespread.

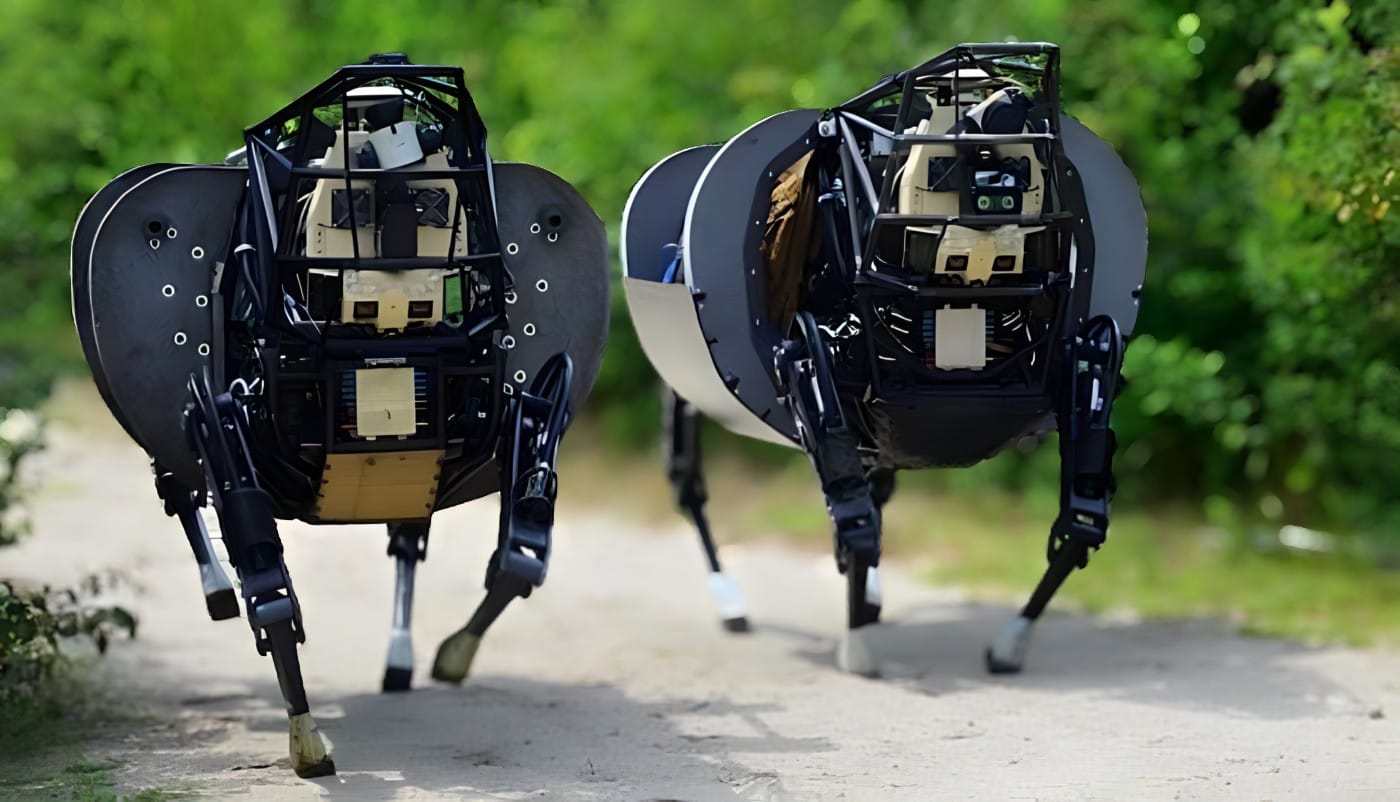

Reliability and ethics will become more of a concern on the battlefield and in a humanitarian context too. Like gunpowder and the atomic bomb, the weaponisation of AI is poised to change warfare. Armed drones trained for warfare and ‘slaughterbots’ can operate autonomously, deciding for themselves whether to take human lives. Even drones intended for aid can negatively impact populations in need if those populations are not objectively identified. “[With] the changes in the speed and the way we fight [a] war, you can see, from a military operations perspective, the reasons for [using AI],” says Toby Walsh, Laureate Fellow and Scientia Professor of Artificial Intelligence at the Department of Computer Science and Engineering at the University of New South Wales. “But there’s the worry of a moral, legal, and technological perspective of the world we’ll be in. Previously, if you wanted to do harm, you’d have to find yourself an army of people, equip and feed them, and persuade them to do evil. Now you won’t need to persuade anyone; you need just one programmer to be able to do that.”

Some apprehension about what the future holds with AI in control is sensible. What might a world where AI and humans coexist look like?

Technology and human rights: a brief timeline

The collective embrace of artificial intelligence requires two things: a quick download of its history, and a willingness to grow with it. AI was born in the 1950s thanks to Alan Turing, an English mathematician famous for his work developing the first modern computers, and John McCarthy, a computer scientist then teaching at Dartmouth College. Though its definition changes with each innovation, AI can be divided into two categories: knowledge-based and statistical learning systems. Knowledge-based systems, also called ‘expert systems,’ are designed to replace or help human experts solve problems, while statistical learning systems sort through and use data to make predictions. It’s easy to see the allure of both. Machines can accelerate human achievement by helping people complete tasks more efficiently and eliminating the grunt work involved in analysis, allowing people to focus on more complex tasks.

However, there’s a price to pay for an increase in collective power. The negative human rights impacts of AI are well-documented. Facial recognition systems, while helpful for improving contactless security and helping law enforcement find missing people, are a huge threat to individual privacy. Some cities, like San Francisco, California and Cambridge, Massachusetts, have forbidden law enforcement from using live facial recognition software on the grounds that it makes people feel watched at every turn. It can also lead to false criminal convictions based on inferences made about someone’s ethnic origin. In recruitment, algorithmic bias can lead to some groups, such as white men, receiving preferential treatment while others, like women and people of colour, are overlooked. Job hunting isn’t the only area where discrimination takes place. It also occurs in healthcare. A 2019 ACLU study found that Black patients had to be much sicker than White patients to receive treatment, a determination made by an algorithm trained on historically biased healthcare data showing that less was spent on healthcare for Black patients than for White patients. An AI tool trained on medical images learned how to report a patient’s race, leading to reduced care in communities of colour with no oversight or regulation. This type of ‘medical racism’ can also be blamed for making extreme cuts to the care of people with disabilities.

“Frequently, it’s an unintended consequence,” says Walsh. “No one was intending harm to be done, but the right questions weren’t asked at the right time. We see a lot of white male tech pros developing technology, and important questions don’t get asked. Perhaps they would have been asked had there been better diversity in the room when the product was being developed.”

Documents do exist to address the negative impact of AI on society and protect human rights. The Toronto Declaration, issued by Amnesty International in 2018, advocates for using the technology responsibly, promotes non-discrimination in areas like employment, equality before the law and freedom of expression, and promises to hold violators responsible for the adverse treatment of those affected by it. Its measures include compensation, sanctions against those responsible and guarantees not to repeat the offence. Similarly, Global Affairs Canada’s Freedom Online Coalition Joint Statement on Artificial Intelligence and Human Rights discusses the implications of AI on freedoms both online and offline, with clear calls to action to preserve privacy, the right to free expression, association and peaceful assembly.

AI in improving human life

Because AI reduces the drudgery involved in tasks like data sorting and analysis, it may be seen as most helpful to humans in a technical capacity. However, AI also shines in the area of healthcare and diagnostics by improving accuracy and reducing human error. Machine involvement in healthcare can be traced back to the 1970s when the goal was codifying expert knowledge. To date, it has been especially helpful in diagnosing and treating skin, lung and brain cancer, autism and Alzheimer's disease.

The downside of AI in healthcare is the vast potential for personal and genetic data to be misused. Period tracking apps, DNA testing kits, and other information collected in the name of clinical research are common examples. Research in the British Medical Journal analysed more than 20,000 mobile health apps on the Google Play store, finding that 88 percent of these apps could track and collect personal data about health symptoms on mobile devices and pass it on to a third party. While such data sharing was actually observed in only 3.9 percent of cases, it is a violation that could give some users pause.

If AI can literally extend people’s lives by diagnosing health conditions faster than a doctor could, it can also provide psychological help. AI-assisted content enforcement would reduce the post-traumatic stress and general psychological toll on humans tasked with moderating the worst of online content (child porn, graphic violence, and the like), a positive human rights impact for those often forgotten about in the digital age. AI also has the potential to reinvent psychiatry. Where a traditional therapist or medication can be cost-prohibitive or even unavailable, AI can analyse a patient’s medical records, social media posts, browsing history and data from wearable devices. This could help traditional psychiatrists track a patient’s depressive tendencies, sleep patterns and energy levels, resulting in more accurate advice or treatment plans.

AI also has the potential to streamline creative fields such as publishing. Businesses can use AI to scan online content to ensure copyrighted material is properly credited, leading to more efficient and less expensive content licensing. But while AI is hugely beneficial in areas like detecting copyright infringement, it cannot detect nuances in human speech. It can also replicate bias, which could result in limits on freedom of expression. Therefore, humans will always be needed to lend accountability to life-changing decisions, despite their own inherent biases.

“When we get to the high stakes decisions, where we’re talking about taking away people’s liberties, denying them visas, or giving them life sentences, humans should always be involved,” says Walsh. “Even if humans are proven to be worse than machines, less perfect, I’m prepared to put up with more errors that humans make. I don’t want to wake up in the 1984/Clockwork Orange world that it would be if a computer was making those decisions.”

When AI acquires rights: challenges and consequences

Eighty-two percent of scientists polled by Scientists for Global Responsibility believe that a dystopian future in which humans exist alongside AI is possible. Preventing such a situation, and addressing the current impact of AI, is challenging because the systems can often be unfair. In the criminal justice system, automated risk assessment scoring can help reduce the number of incarcerations, while also increasing the number of mistaken ones. Governments and businesses that utilise AI need to ensure that it does not replicate biases learned from human decision-makers. Given the uncertainty and complexity of how AI will develop, and of what defines ‘sentience’, failures in transparency and accountability in AI systems could even leave them with questionable morals, creating significant ethical and legal dilemmas.

Similarly, AI’s role in the financial system may lead to complications for personal finances. Credit score algorithms, which currently rely on voluntary reporting from banks, utility companies and businesses, do not necessarily include all the pertinent information about an individual. This can lead to an incomplete picture of a person’s financial position, potentially trapping them in a poor credit score cycle. AI-generated credit scores can foster financial inclusion because they factor in more data, but they can also make snap judgements based on a person’s digital ‘tone’—which can include anything from typing in all caps to analysing the people in their social network. When this happens, the algorithm can have a chilling effect on a person’s behaviour, causing them to self-censor their daily social media habits—at the expense of their personal autonomy and well-being. Central banks have recognised the urgency of staying ahead of AI’s decision-making powers.

According to the International Monetary Fund, financial institutions worldwide have pledged to double their spending on AI by 2027. They plan to prioritise machine learning tools and robots that can assist with risk management, prospecting and fraud prevention, as well as minimise the training of AI on outdated data that can create a negative feedback loop. Though it requires a large amount of oversight, the financial world is one place where AI with rights can help foster more inclusion and equity. It can identify and welcome individuals who have no bank accounts or financial history into the commercial banking system, as well as look for data bias and lack of transparency when assessing loans to borrowers. With proper oversight, AI can also play a proactive role in providing equitable financial services to women, reducing bias and assisting impact investors to serve marginalised groups. According to the Center for Financial Inclusion, women in North America and Europe have received higher pricing, lower credit limits and limited borrowing capabilities, all the result of AI trained on outdated data sets. Researchers also found that Latinx and African American borrowers were charged 7.9 and 3.6 basis points more in interest on purchase and refinance mortgages, respectively. Though these oversights are disheartening, using AI in finance can still save time and offer peace of mind. The use of AI can reduce the need to work in tandem with a financial advisor on some tasks, assist with fraud prevention and detection, and provide support for human decisions at every stage of the planning process.

With all the power entrusted to machines, who is responsible for their regulation? Currently, there are two schools of thought on how to regulate AI. The first is risk-based, which requires self-regulation by developers, leaving much up to the private sector. The European Artificial Intelligence Act, the first such law established by a major regulator to ensure the technology is used safely and responsibly, assigns AI applications to three risk categories according to the danger they pose: high, limited and low. In the latter two categories, users can opt out of or make informed decisions about using AI depending on their comfort level and privacy tolerance. Applications in the ‘high’ category are banned if they create unnecessary risk. Another possible route would be embedding human rights in the entire life cycle of AI, from the collection of data to the development and deployment of tools that use it, and listening to harms experienced by at-risk groups, including women, minorities, and other marginalised individuals. Special attention could be paid to abuse of power in law enforcement, justice and financial services.

The European Artificial Intelligence Act would require transparency around risk management from the scientific community and industries that use AI, and would ban systems that manipulate human behaviour, as well as emotion-recognition technology and biometric identification in public. In a similarly protective fashion, AI chatbots would be required to inform users that they are interacting with a machine. Violators of these rules could be fined up to 35 million EUR, or seven percent of global annual turnover, whichever is higher.
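The ‘whichever is higher’ rule is simple arithmetic, and a short sketch makes the break-even point visible. The Python below is illustrative only: it assumes the two figures quoted above act as the ceiling for a single violation, and the function name and sample turnover figures are hypothetical rather than taken from the Act.

```python
# Illustrative sketch only: the function name and sample turnovers are hypothetical.
FIXED_CAP_EUR = 35_000_000   # flat figure quoted in the text
TURNOVER_PERCENT = 7         # seven percent of global annual turnover


def max_fine_eur(global_annual_turnover_eur: float) -> float:
    """Return the larger of the flat figure and the turnover-based figure."""
    if global_annual_turnover_eur < 0:
        raise ValueError("turnover cannot be negative")
    turnover_based = global_annual_turnover_eur * TURNOVER_PERCENT / 100
    return max(FIXED_CAP_EUR, turnover_based)


if __name__ == "__main__":
    for turnover in (100_000_000, 500_000_000, 2_000_000_000):
        ceiling = max_fine_eur(turnover)
        print(f"turnover {turnover:,} EUR -> ceiling {ceiling:,.0f} EUR")
```

Under these assumptions the two figures meet at a turnover of 500 million EUR. Below that, the flat 35 million EUR figure applies; above it, the percentage takes over, so the ceiling keeps growing with the size of the company.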

Are humans doomed?

Despite the innate tendency of humans to be innovators obsessed with the latest technology, it’s unlikely humans are headed for a future where machines will destroy them. Creativity, warmth, empathy and experience are qualities that can’t be replicated, no matter how advanced the smartest robot becomes.

“Great books talk about life lost and love, and all the things that humans experience,” says Walsh. “Machines are never going to be able to speak about those things, because they'll never fall in love, lose a loved one, or have to contemplate their mortality. Those are the themes that great literature speaks to. They're uniquely human experiences.”

And yet, AI assists with the drudgery in daily life, and people are forced to adapt accordingly. Thanks to AI-assisted recruitment, companies can and will use algorithms to narrow down a candidate pool by word choice, inflection, facial tics and other emotional cues. As with AI-based credit scoring, where a person can be judged by their spending habits, job applicants could come to expect unfair treatment due to AI-learned recruitment bias. Educators also face a double-edged sword when using AI in the classroom. AI-assisted grading on standardised tests can encourage students to write more deliberately and give them an incentive to improve, while traditional test scoring is still at the mercy of educators. By using AI to complete necessary but routine tasks, teachers would be free to assist students in deeper ways. Individualised attention will always help students learn and improve their skills.

Teaching AI literacy through public awareness and education will reduce the fear of the unknown. Accountability must be required from tech giants, rather than allowing a smoke-and-mirrors approach when introducing innovations to the masses. While this might not be an issue for students growing up with AI as a modern study assistant, one of the most challenging aspects of AI will be ensuring that vulnerable populations are not left to adapt on their own. Deploying AI with a human rights-first mindset can make it safer. Apps that violate human rights can be banned. The United Nations Educational, Scientific and Cultural Organization’s AI educational course, free in 20 languages, teaches individuals to use AI in a way that respects their rights, as well as to protect themselves from private companies that aren’t held responsible for their actions. Additionally, it’s important to embed human rights principles in the life cycle of AI development from beginning to end. Placing limits on AI through regulation and governance at all points of the process, from the selection of data to the deployment of services, would create greater transparency and foster an environment where AI’s potential can thrive.

Regardless of the level of control AI has over people in the years to come, the experience of being alive is unique to each individual. “AI is one of the most important scientific questions of the century,” says Walsh. “Because it may shed some light on what it means to be us. I think nothing becomes a more profound question to ask than that one.”